ENHANCING INSTANCE SELECTION WITH ENSEMBLE METHODS: A COMPARATIVE ANALYSIS OF ACCURACY AND DATA COMPRESSION ACROSS MULTIPLE ALGORITHMS

DOI:

https://doi.org/10.71146/kjmr553

Keywords:

Instance Selection, Repeated Edited Nearest Neighbor, Clonal Selection Algorithm, Object Selection by clustering, Back Propagation

Abstract

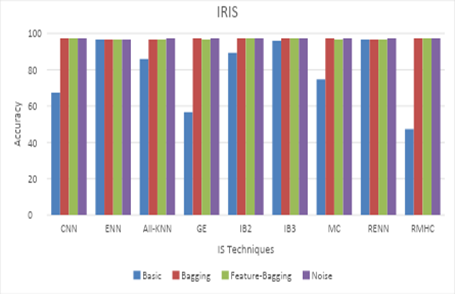

Instance selection is a crucial preprocessing step that reduces the computational complexity of machine learning models, particularly when combined with feature selection and dimensionality reduction. By keeping the most representative instances and removing redundant or irrelevant data points, it can also improve prediction accuracy. Although instance selection has been widely studied, the application of ensemble methods to instance selection remains underexplored in the literature. Ensemble methods, which combine multiple models to improve predictive performance, offer a promising way to refine instance selection strategies.

In this study, we explore the efficacy of four ensemble strategies (voting, feature bagging, additive noise, and bagging) when applied to nine instance selection algorithms: CNN, ENN, GE, IB2, IB3, All-KNN, RMHC, MC, and RENN. The goal is to determine how far these ensemble methods improve the individual instance selection algorithms in terms of accuracy and compression efficiency.

The experimental evaluation is conducted in three phases. In the first phase, we assess the accuracy and data compression of each instance selection method on a specific dataset. This phase establishes a baseline for the instance selection algorithms and gives an initial indication of how well they generalize. In the second phase, we evaluate the four ensemble strategies across six datasets to assess how robustly they improve the accuracy of instance selection algorithms when data characteristics vary. The ensemble methods are expected to be more stable and to generalize better than individual instance selection techniques, potentially leading to improved prediction results.

In the final phase, we compare the compression effectiveness of the nine instance selection algorithms, both individually and within the ensemble frameworks, across the six datasets. By comparing the compression ratios achieved by each method, we aim to identify the instance selection techniques that retain key information while most reducing the size of the data for subsequent modeling. This analysis is critical for understanding the trade-off between computational efficiency and the preservation of valuable data.
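
To make the idea of an ensemble of instance selectors concrete, the following is a minimal sketch, not the authors' implementation. It assumes ENN (with k = 3) as the base selector, uses subsampling without replacement as a simple stand-in for bagging, keeps an instance when it is retained in at least half of the rounds in which it was sampled, and reports compression as the fraction of instances removed. The function names, parameters, and thresholds are all hypothetical choices made for illustration.

```python
import numpy as np


def enn_keep_mask(X, y, k=3):
    """Edited Nearest Neighbor: keep a point only if a strict majority
    of its k nearest neighbors (excluding itself) share its label."""
    n = len(X)
    # Pairwise squared Euclidean distances between all instances.
    d = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    np.fill_diagonal(d, np.inf)  # a point is not its own neighbor
    k = min(k, n - 1)
    nn = np.argsort(d, axis=1)[:, :k]          # indices of the k nearest neighbors
    agree = (y[nn] == y[:, None]).sum(axis=1)  # neighbors with the same label
    return agree * 2 > k


def bagged_vote_selection(X, y, selector=enn_keep_mask, n_rounds=10,
                          frac=0.8, threshold=0.5, seed=0):
    """Run the selector on random subsamples and vote per instance.
    Assumption: subsampling without replacement stands in for bagging."""
    rng = np.random.default_rng(seed)
    n = len(X)
    seen = np.zeros(n)  # rounds in which each instance was sampled
    kept = np.zeros(n)  # rounds in which the selector retained it
    m = min(n, max(2, int(frac * n)))
    for _ in range(n_rounds):
        idx = rng.choice(n, size=m, replace=False)
        mask = selector(X[idx], y[idx])
        seen[idx] += 1
        kept[idx] += mask
    # Instances that were never sampled are kept by default.
    score = np.where(seen > 0, kept / np.maximum(seen, 1), 1.0)
    return score >= threshold


def compression(mask):
    """Fraction of instances removed by a selection mask."""
    return 1.0 - float(np.mean(mask))


if __name__ == "__main__":
    # Synthetic two-class data, used only to exercise the functions.
    rng = np.random.default_rng(42)
    X = np.vstack([rng.normal(0, 1, (50, 2)), rng.normal(3, 1, (50, 2))])
    y = np.array([0] * 50 + [1] * 50)
    mask = bagged_vote_selection(X, y)
    print("retained:", int(mask.sum()), "compression:", compression(mask))
```

Any other selector with the same `(X, y) -> boolean mask` shape could be passed as `selector`, which is one way the nine algorithms named above might be plugged into a shared voting scheme under these assumptions.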

License

Copyright (c) 2025 Muhammad Ans Khalid, Hira Batool, Abdulrehman Arif, Syed Zohair Quain Haider, Hassan Ahmad (Author)

This work is licensed under a Creative Commons Attribution 4.0 International License.